Overview

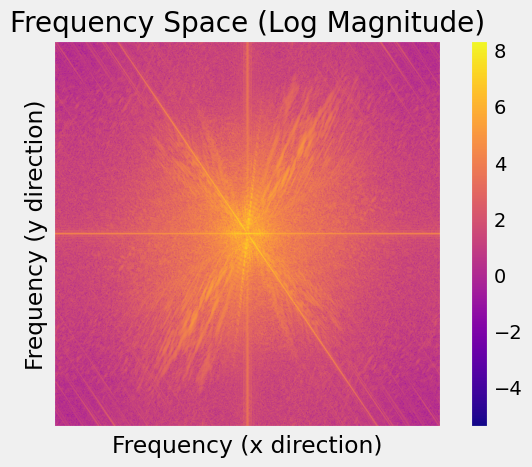

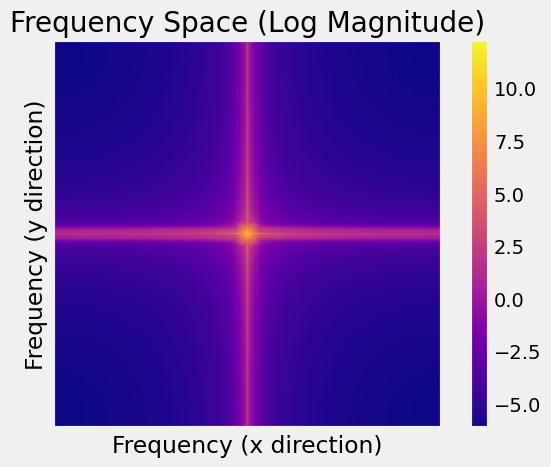

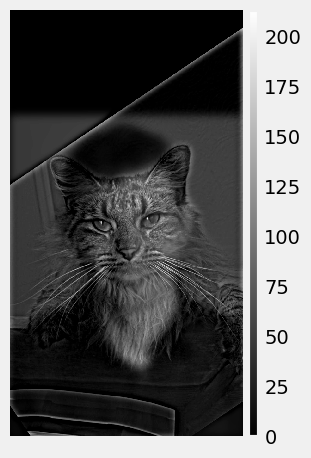

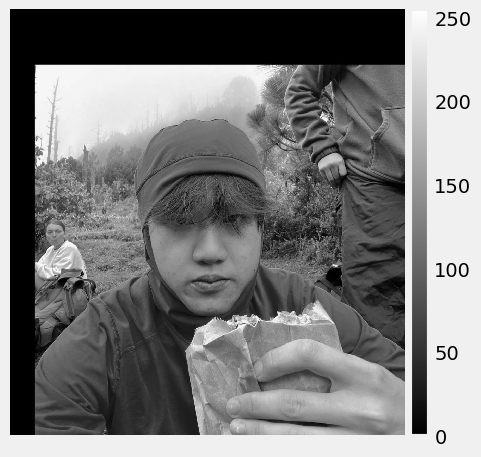

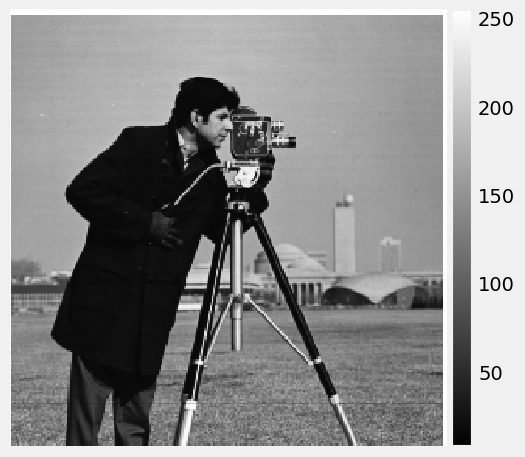

This project explores the frequency domain of images. In the first part, I built an edge detector using the finite derivative (finite difference) operator and used a Gaussian filter to suppress the effect of noise on the detected edges. In the second part, I tried two ways of blending images. The first method combines the low frequencies of one image with the high frequencies of another, so the result gives different impressions when viewed from far away and up close. The second method uses multiresolution blending, a technique introduced in the 1983 paper by Burt and Adelson, which produces a seamless transition when blending two images with a mask.

Part 1: Fun with Filters!

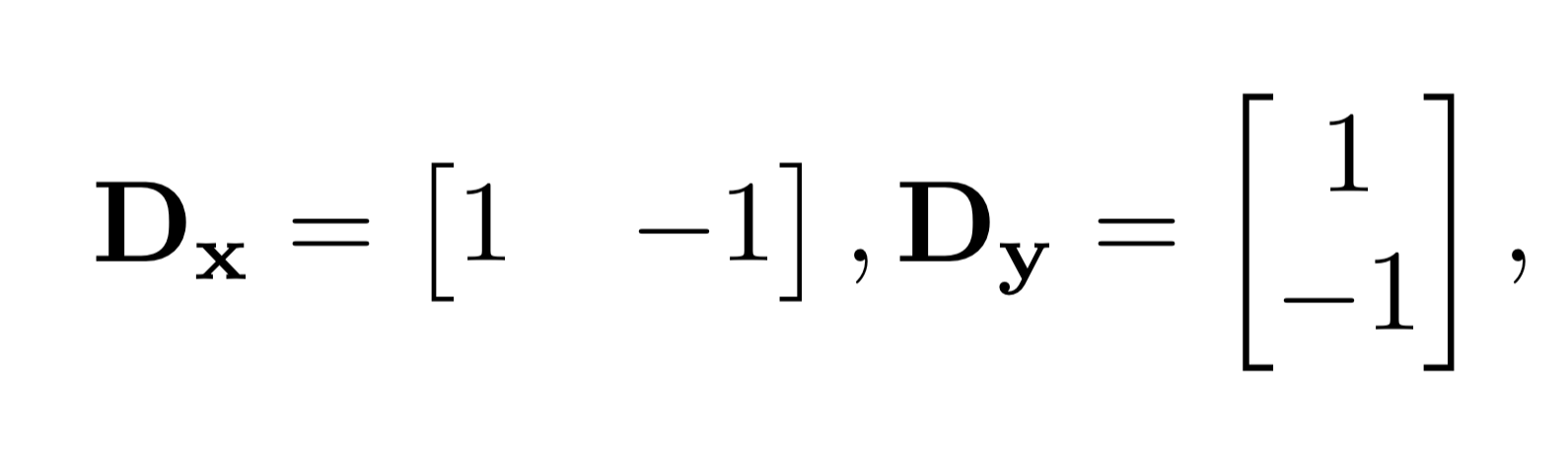

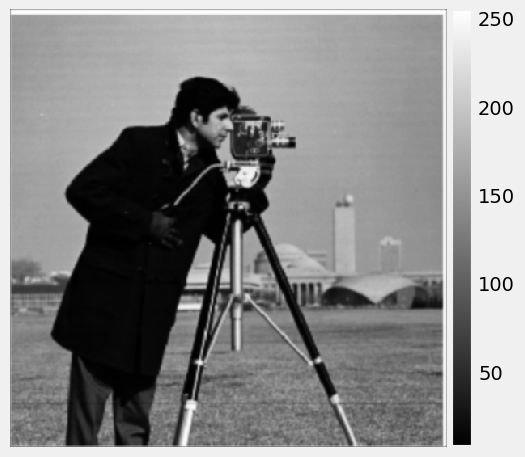

1.1: Finite Derivative Operator

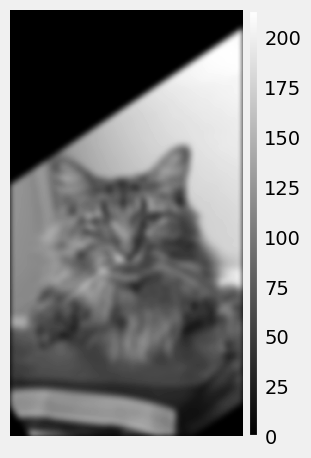

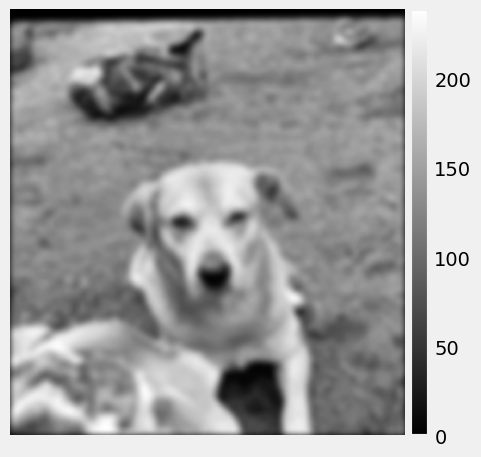

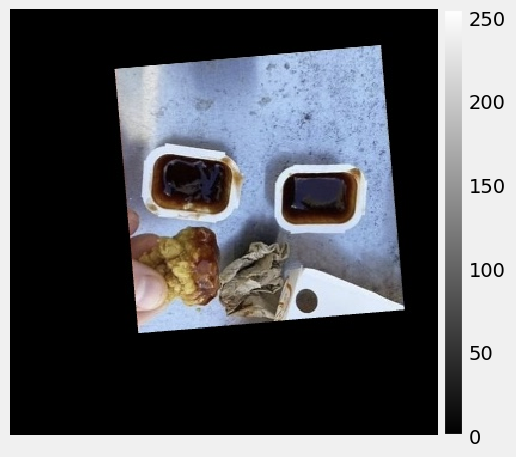

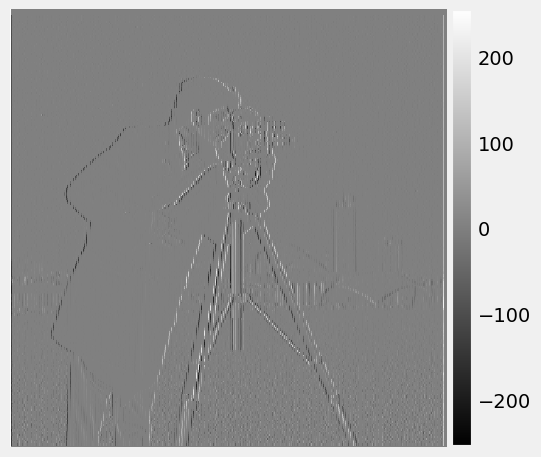

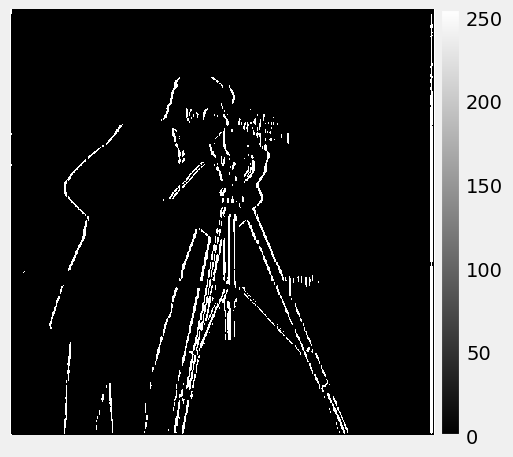

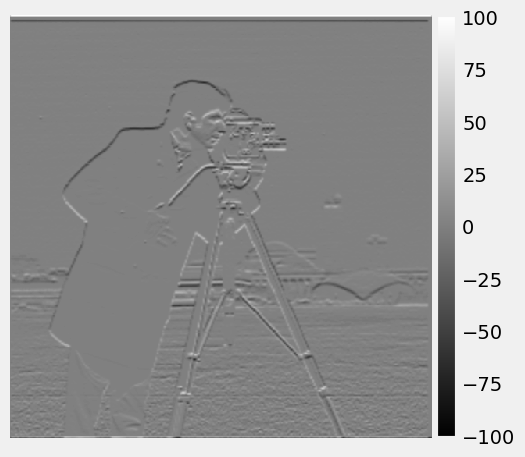

The finite derivative operator is a simple way to detect edges. In the code, I used two small convolution kernels: one responds to intensity changes in the horizontal direction and the other to changes in the vertical direction.

I used `signal.convolve2d` from the SciPy library to convolve the image with the two kernels. The two results are the partial derivatives of the image in x and y, which together form the image gradient.
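
The step above can be sketched as follows. This is a minimal illustration, not the exact project code: the kernel values `[1, -1]`, the names `D_x`, `D_y`, and `partial_derivatives`, and the `mode="same"` / `boundary="symm"` settings are assumptions chosen so that the output keeps the input size and the image border does not show up as an edge.

```python
import numpy as np
from scipy import signal

# Assumed finite difference kernels (hypothetical names):
# D_x differences neighbouring columns, D_y differences neighbouring rows.
D_x = np.array([[1, -1]])
D_y = np.array([[1], [-1]])

def partial_derivatives(img):
    """Return (dI/dx, dI/dy) for a 2D grayscale float image."""
    # boundary="symm" mirrors the image at the border (an assumption),
    # so the border itself does not produce a spurious response.
    grad_x = signal.convolve2d(img, D_x, mode="same", boundary="symm")
    grad_y = signal.convolve2d(img, D_y, mode="same", boundary="symm")
    return grad_x, grad_y

# Usage on a synthetic image with a single vertical step edge.
step = np.zeros((4, 6))
step[:, 3:] = 1.0
gx, gy = partial_derivatives(step)
```

On this synthetic image only `gx` responds, because the intensity changes along x and stays constant along y.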

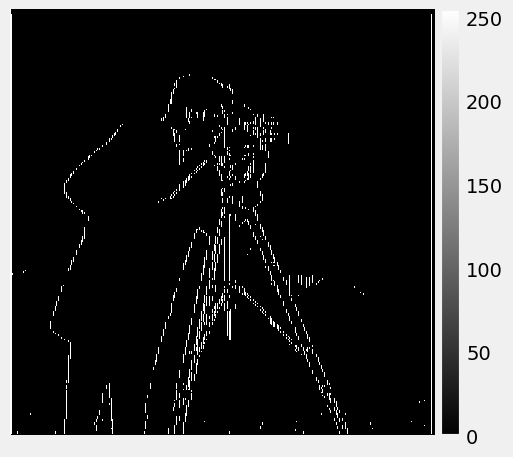

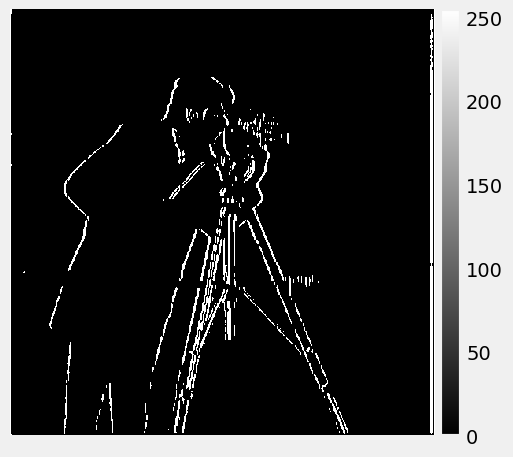

To make the edges stand out, I binarized the gradient images. I chose a threshold of 0.25 (with pixel values in the range [0, 1]), so that any pixel below the threshold becomes black and any pixel above it becomes white.
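
A minimal sketch of the binarization step is shown here. The function name `binarize` is hypothetical, and taking the absolute value first is an assumption: a derivative image can be negative, and without it edges that go from bright to dark would be dropped.

```python
import numpy as np

def binarize(grad, threshold=0.25):
    """Return 1.0 (white) where |grad| exceeds threshold, else 0.0 (black)."""
    # np.abs is an assumption so that both rising and falling edges survive.
    return (np.abs(grad) > threshold).astype(np.float64)

# Usage on a tiny hand-made gradient patch.
patch = np.array([[0.10, -0.50],
                  [0.30,  0.25]])
edges = binarize(patch)
```

A lower threshold keeps more weak edges but also more noise, which is why the value has to be tuned per image.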

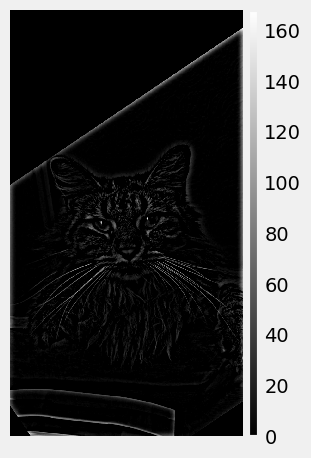

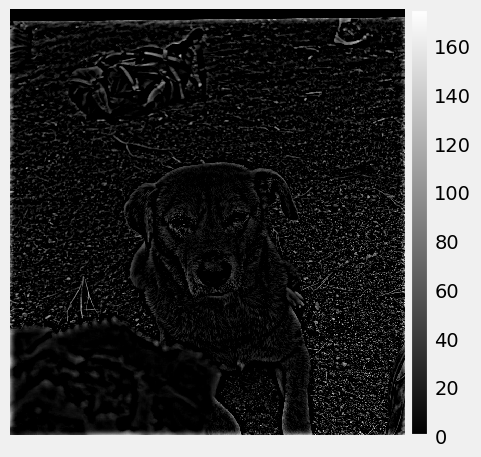

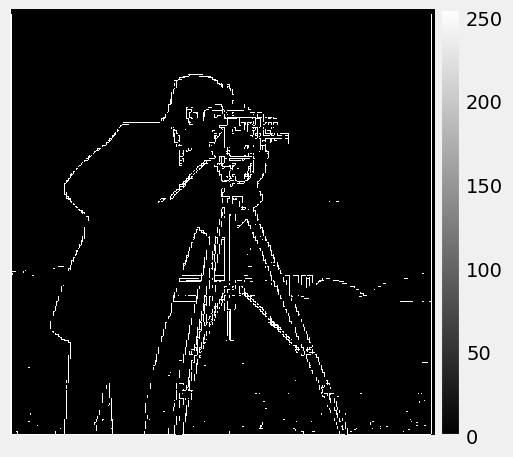

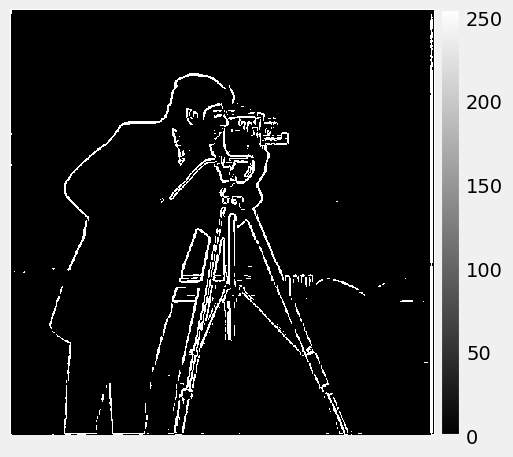

Combining the two edge images with `np.sqrt(edge_imgX ** 2 + edge_imgY ** 2)` (the L2 norm of the gradient at each pixel, i.e. the gradient magnitude) gives the complete edge image:
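
As a small worked example of this formula, the sketch below wraps the expression in a helper (the name `gradient_magnitude` is hypothetical) and evaluates it on two hand-made arrays standing in for `edge_imgX` and `edge_imgY`.

```python
import numpy as np

def gradient_magnitude(edge_imgX, edge_imgY):
    """Per-pixel L2 norm of the (x, y) derivative pair."""
    return np.sqrt(edge_imgX ** 2 + edge_imgY ** 2)

# Stand-in derivative images (illustrative values only).
edge_imgX = np.array([[3.0, 0.0], [0.0, -1.0]])
edge_imgY = np.array([[4.0, 0.0], [2.0,  0.0]])
magnitude = gradient_magnitude(edge_imgX, edge_imgY)
```

Because the values are squared, the sign of each derivative does not matter: an edge is kept whether the image gets brighter or darker across it.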

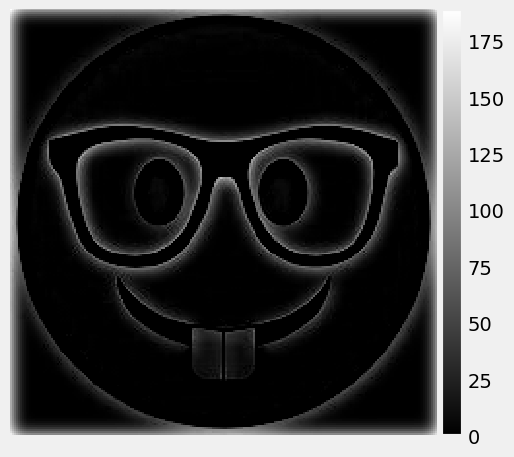

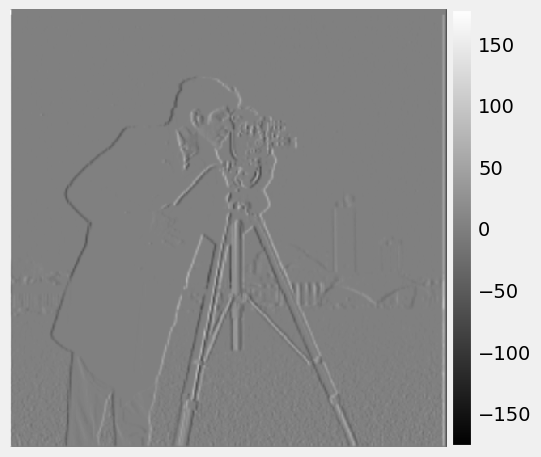

1.2: Derivative of Gaussian (DoG) Filter

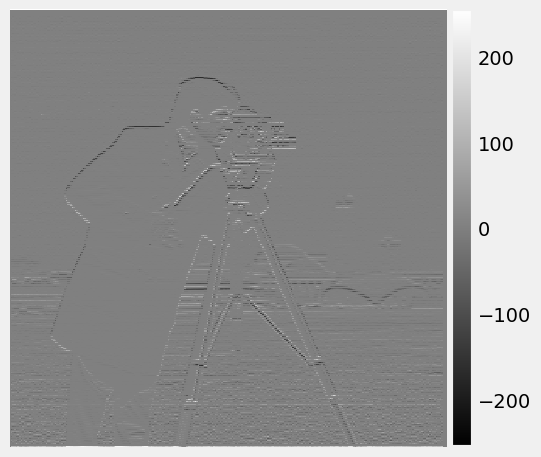

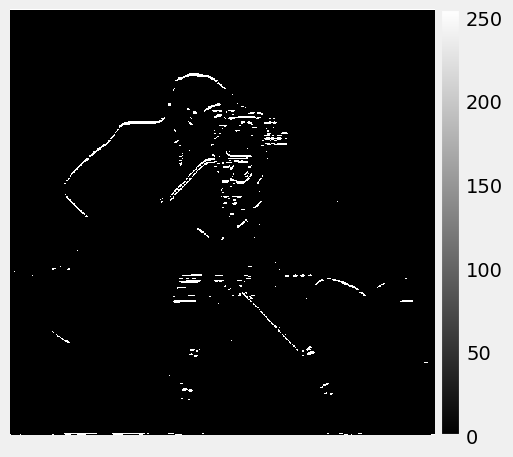

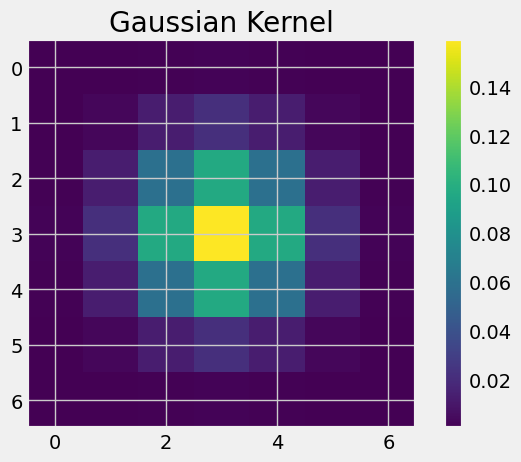

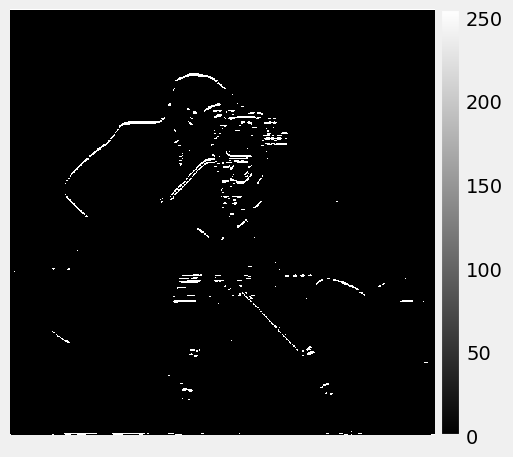

The edge images above show noticeable noise in the background. Applying a Gaussian filter is a good way to get rid of it. I built the filter by first calling `cv2.getGaussianKernel()` to create a 1D Gaussian, then taking the outer product of that 1D kernel with itself to get a 2D Gaussian convolution kernel. Convolving the image with this kernel produces a blurred image in which the noise is suppressed.
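
The construction can be sketched as below. To keep the example runnable without OpenCV, the 1D kernel is built with NumPy as a stand-in for `cv2.getGaussianKernel()`; the helper names, the kernel size 9, the sigma 1.5, and the `boundary="symm"` setting are all assumptions for illustration, not the values used in the project.

```python
import numpy as np
from scipy import signal

def gaussian_kernel_1d(ksize, sigma):
    """Normalized 1D Gaussian as a (ksize, 1) column vector."""
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return (g / g.sum()).reshape(-1, 1)

def gaussian_kernel_2d(ksize, sigma):
    """2D Gaussian as the outer product of the 1D kernel with itself."""
    g = gaussian_kernel_1d(ksize, sigma)
    return g @ g.T

def blur(img, ksize=9, sigma=1.5):
    """Low-pass filter a 2D grayscale image (hypothetical defaults)."""
    kernel = gaussian_kernel_2d(ksize, sigma)
    return signal.convolve2d(img, kernel, mode="same", boundary="symm")

# Usage: the kernel sums to 1, so a flat image stays flat after blurring.
G = gaussian_kernel_2d(9, 1.5)
flat = blur(np.full((10, 10), 0.3))
```

The outer product works because the 2D Gaussian is separable: it factors into a Gaussian in x times a Gaussian in y.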

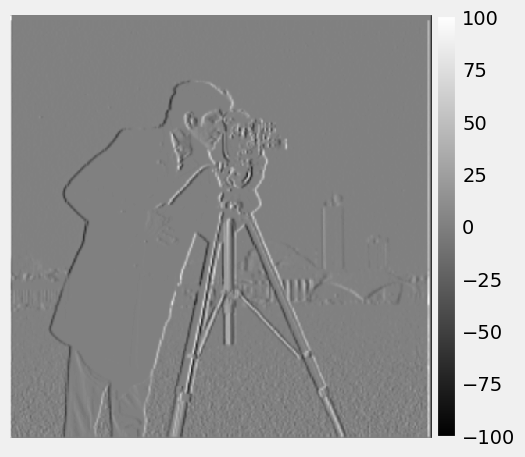

I can now apply the finite derivative operator to the blurred image to get a better edge detection result. The detected edges are smoother and less noisy.

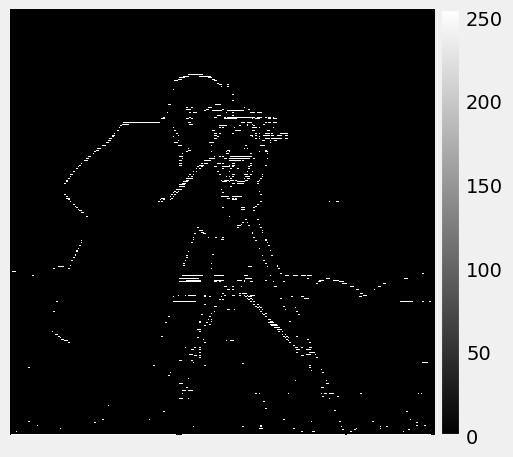

I chose 0.1 as the threshold for binarizing:

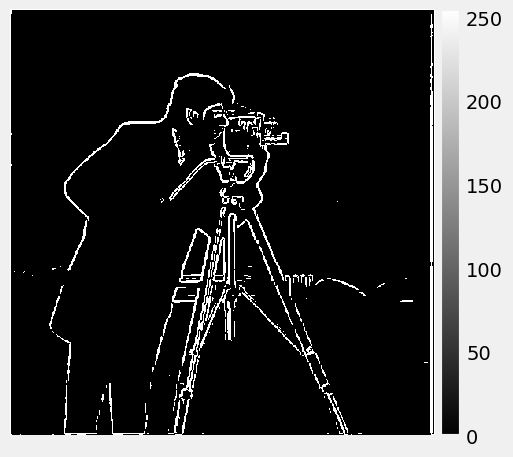

Combining the two edge images using the algorithm above creates this:

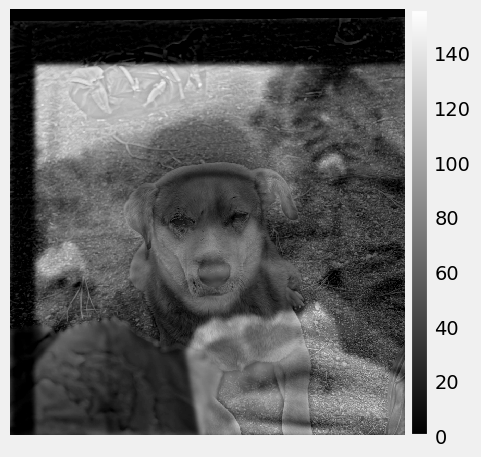

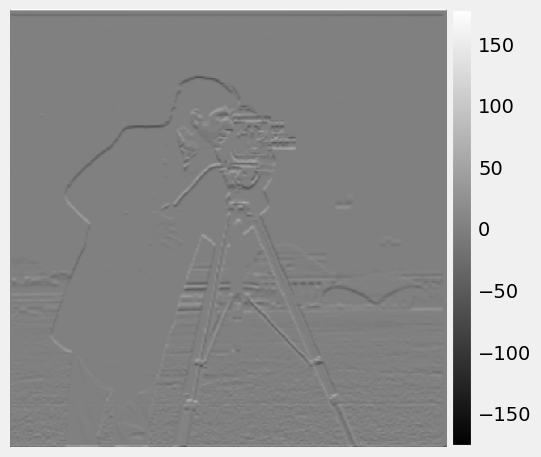

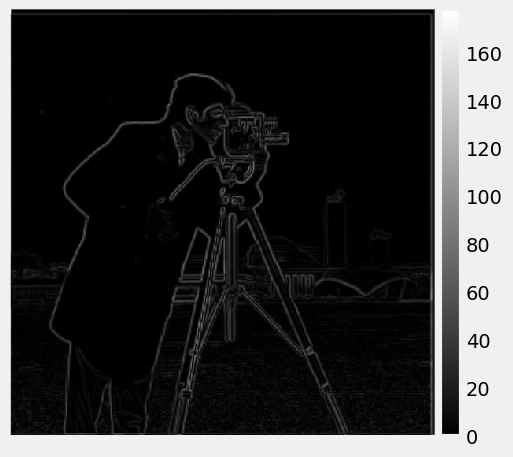

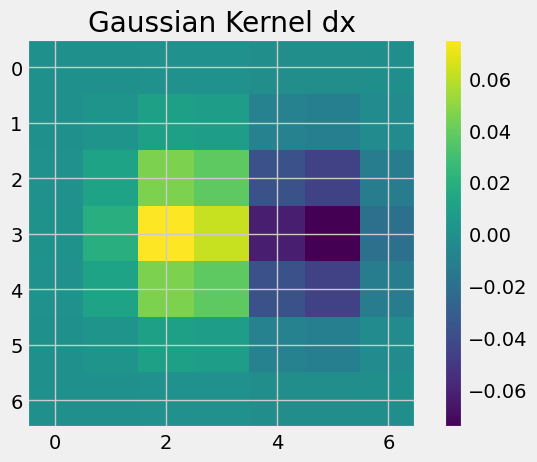

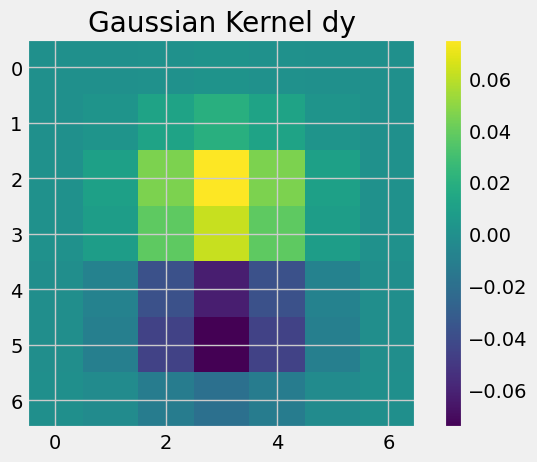

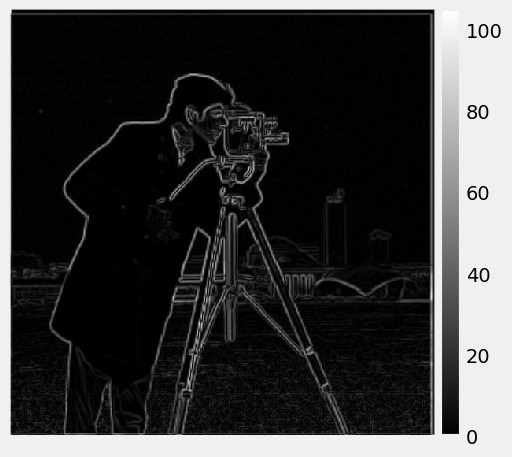

Since both Gaussian filtering and the finite derivative are convolutions, and convolution is associative, the order of the operations does not matter. I can convolve the Gaussian kernel with the finite derivative kernels first, and then apply the resulting derivative of Gaussian (DoG) filters to the image.
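
The equivalence can be checked numerically with a short sketch. The kernel `D_x`, the kernel size and sigma, and the random test image are assumptions for illustration. The default `mode="full"` of `signal.convolve2d` is used on purpose: with full zero-padded convolutions the two routes agree up to floating point error, whereas cropping with `mode="same"` can introduce small differences near the border.

```python
import numpy as np
from scipy import signal

D_x = np.array([[1, -1]])  # assumed finite difference kernel

def gaussian_kernel_2d(ksize, sigma):
    """2D Gaussian built as an outer product of a normalized 1D Gaussian."""
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    g = g / g.sum()
    return np.outer(g, g)

rng = np.random.default_rng(0)
img = rng.random((32, 32))       # random stand-in for a grayscale image
G = gaussian_kernel_2d(9, 1.5)   # hypothetical size and sigma

# Route 1: blur the image, then take the finite derivative.
blur_then_diff = signal.convolve2d(signal.convolve2d(img, G), D_x)

# Route 2: build the DoG filter once, then apply it to the image.
dog_x = signal.convolve2d(G, D_x)
dog_result = signal.convolve2d(img, dog_x)
```

Route 2 needs only one convolution over the full image, since the small DoG kernel is computed once up front.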

I again chose 0.1 as the threshold for binarizing:

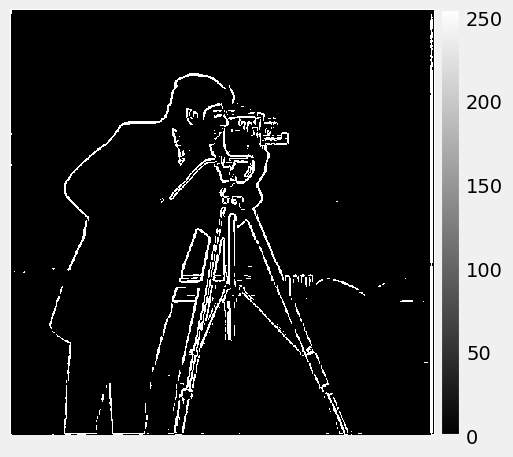

Combining the two edge images using the algorithm above creates this:

Both approaches produce nearly identical results, apart from some minor differences. The two binarized images are exactly the same under the same threshold. This is because the finite derivative and the Gaussian filter are both linear, shift-invariant operations (convolutions), so the order in which they are applied does not matter.

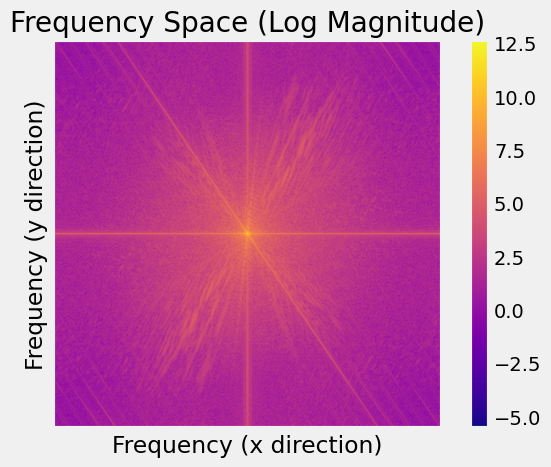

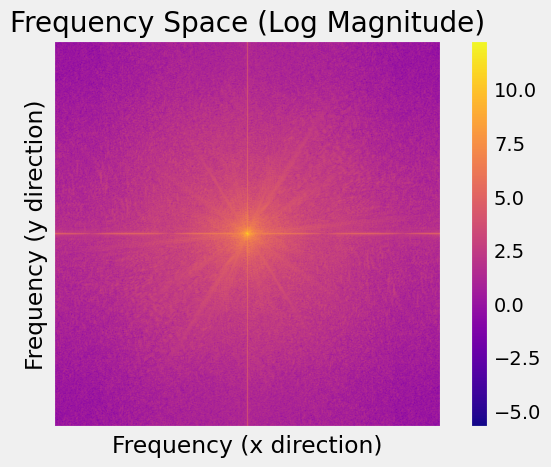

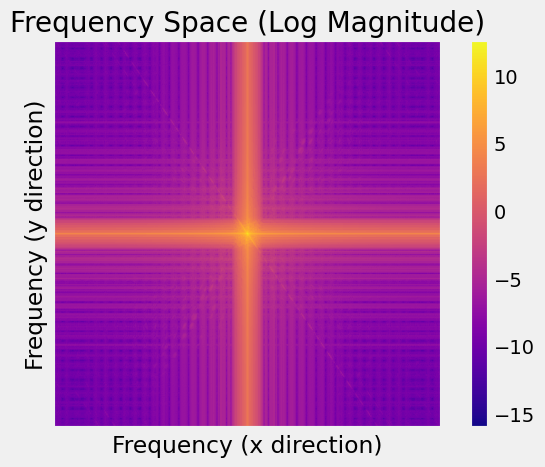

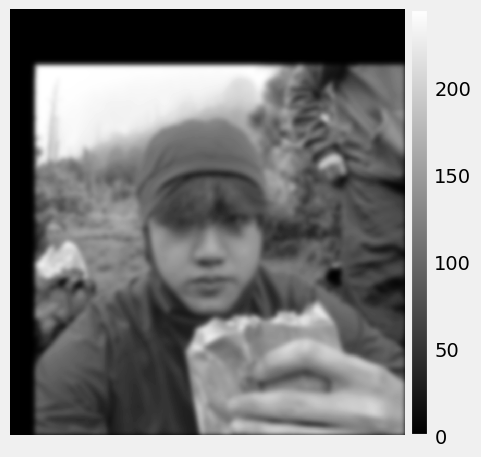

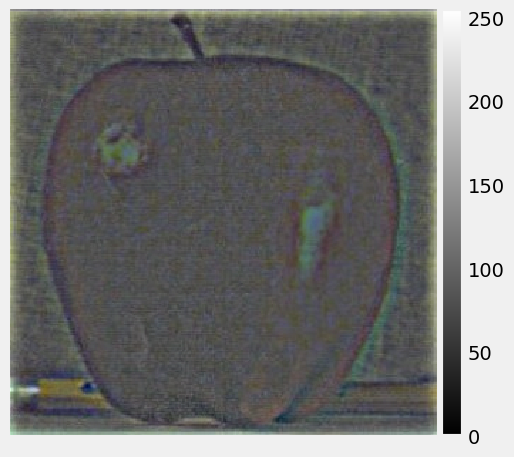

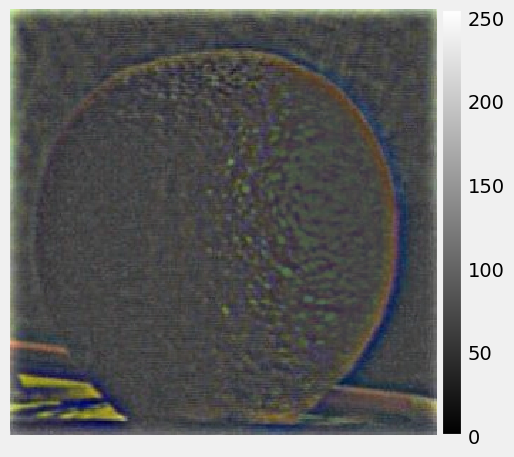

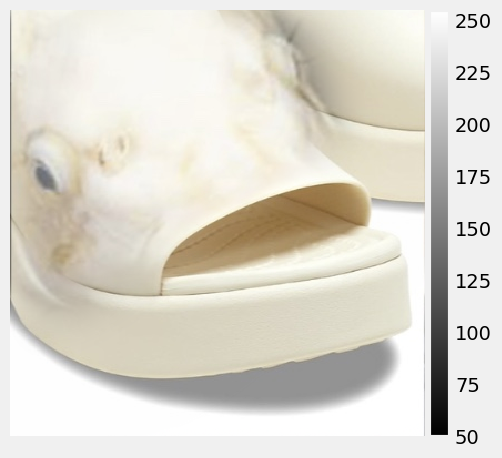

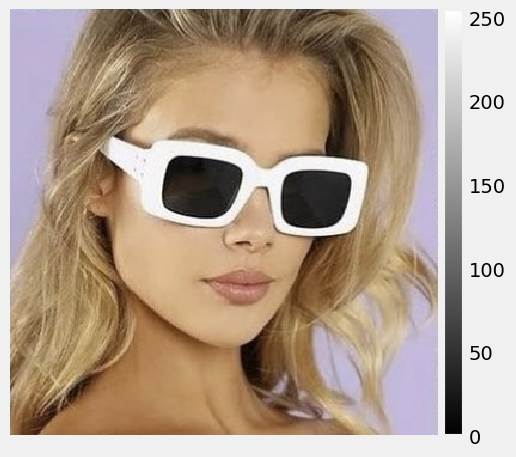

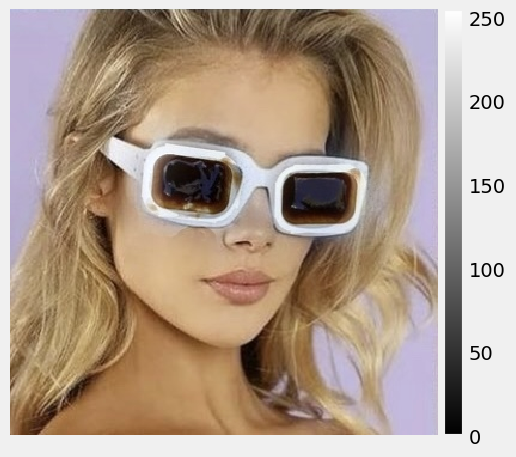

Part 2: Fun with Frequencies!

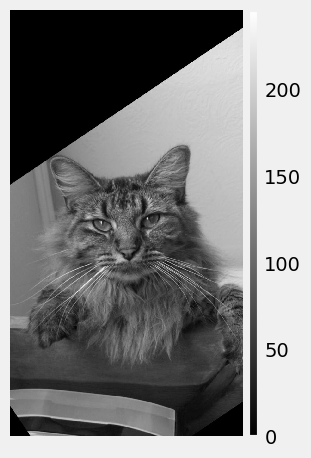

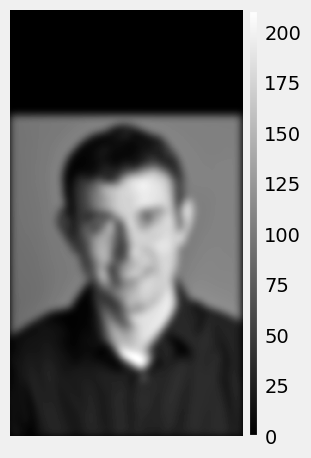

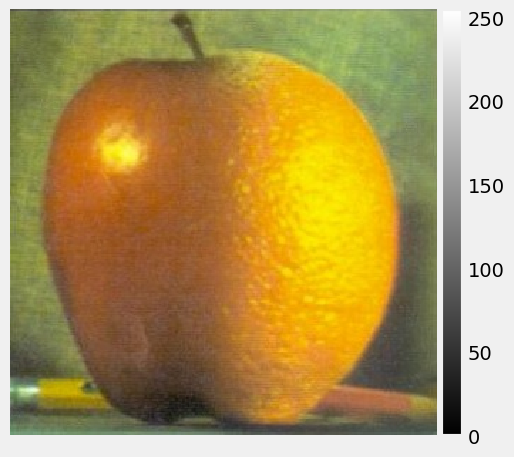

2.1: Image Sharpening

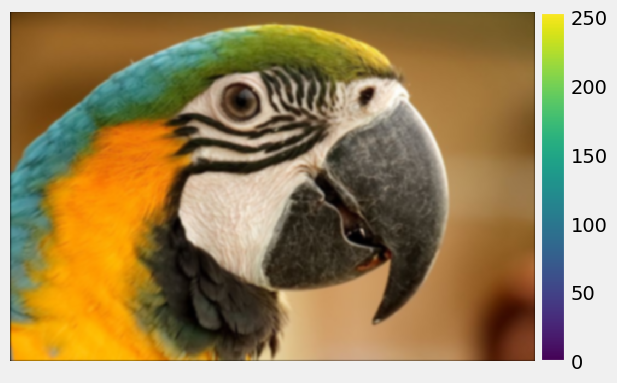

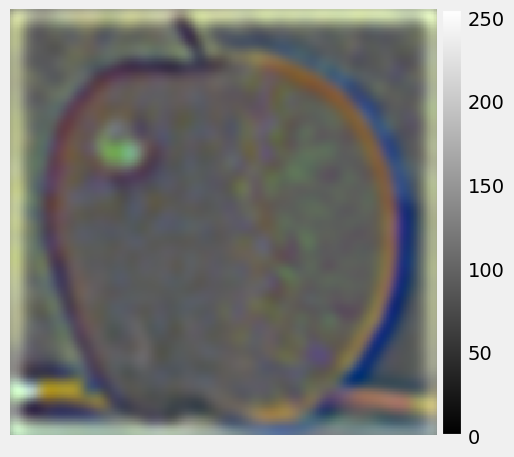

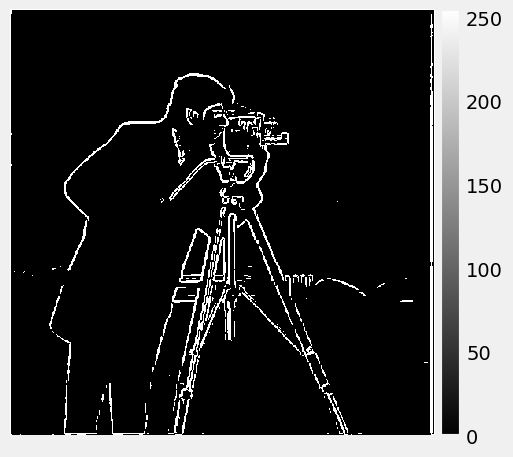

The idea of image sharpening is to boost the high-frequency content of the image, which makes the image appear more detailed. To extract the high-frequency content, we first create a blurred version of the image, which represents its low-frequency part. Then we subtract the blurred image from the original. Finally, we add the extracted high-frequency content back to the original image to create a sharpened image.
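
The three steps can be sketched for a single channel as follows. The function name `sharpen`, the Gaussian size and sigma, the scaling factor `alpha`, and the final clipping to [0, 1] are assumptions added for illustration; the description above corresponds to `alpha = 1`. For a colour image, the same function could be applied to the R, G, and B channels separately.

```python
import numpy as np
from scipy import signal

def sharpen(channel, ksize=9, sigma=1.5, alpha=1.0):
    """Sharpen one 2D float channel in [0, 1] by boosting high frequencies."""
    # Step 1: low-frequency part = Gaussian-blurred channel.
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    g = g / g.sum()
    low = signal.convolve2d(channel, np.outer(g, g),
                            mode="same", boundary="symm")
    # Step 2: high-frequency part = original minus blurred.
    high = channel - low
    # Step 3: add the high frequencies back (alpha is a hypothetical gain).
    return np.clip(channel + alpha * high, 0.0, 1.0)

# Usage on a synthetic channel with one soft-valued step edge.
step = np.full((16, 16), 0.25)
step[:, 8:] = 0.75
sharpened = sharpen(step)
```

Flat regions are left unchanged because their high-frequency part is zero, while the contrast right at the edge is exaggerated, which is what makes the image look crisper.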

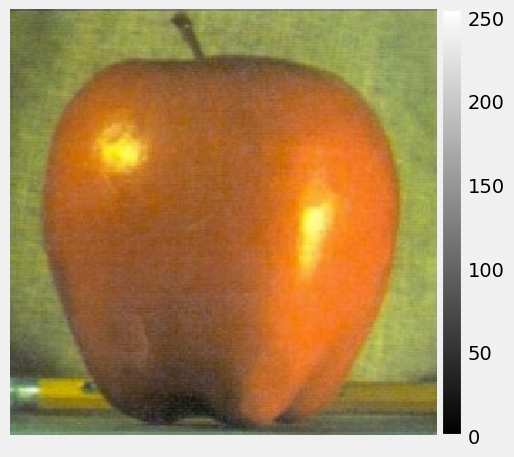

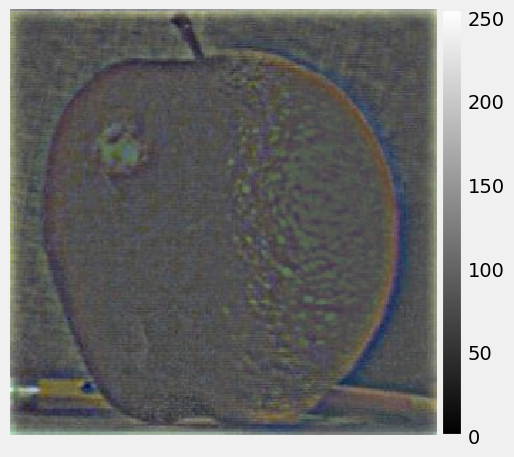

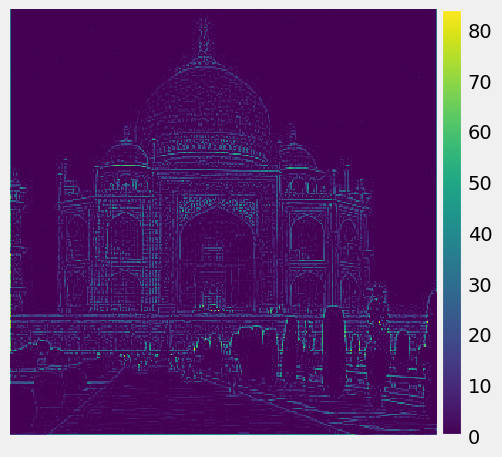

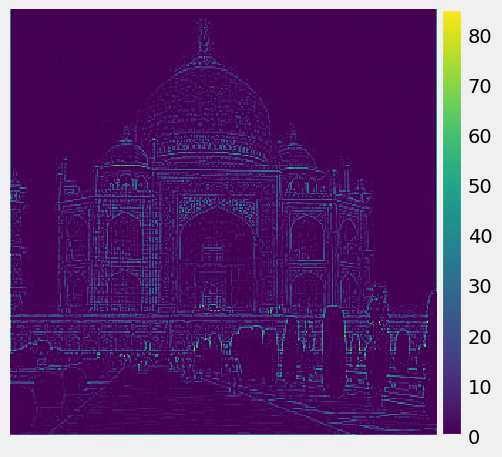

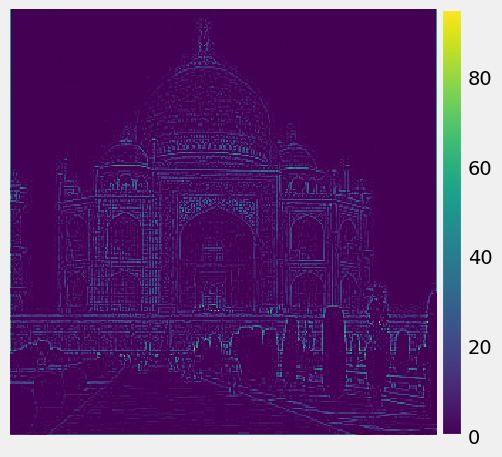

High frequency of the R, G, B channels of Taj:

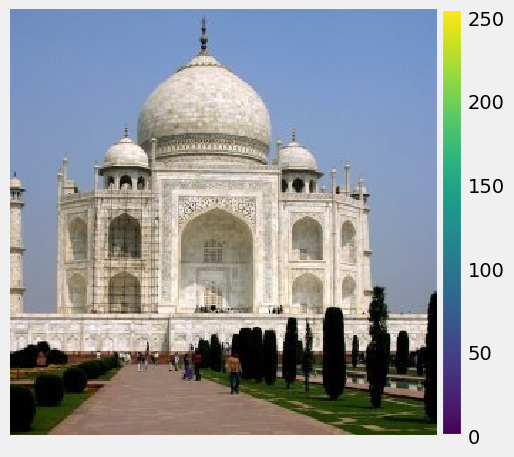

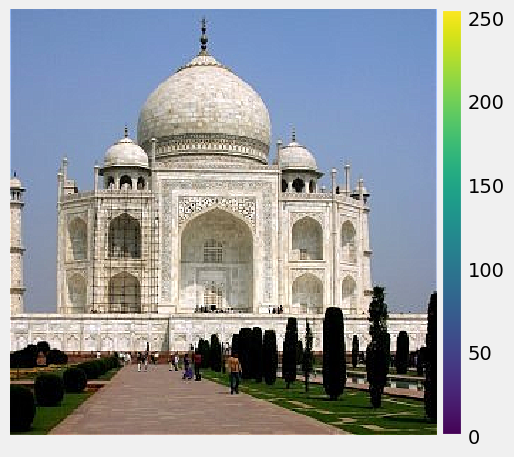

Adding high frequency to the original image:

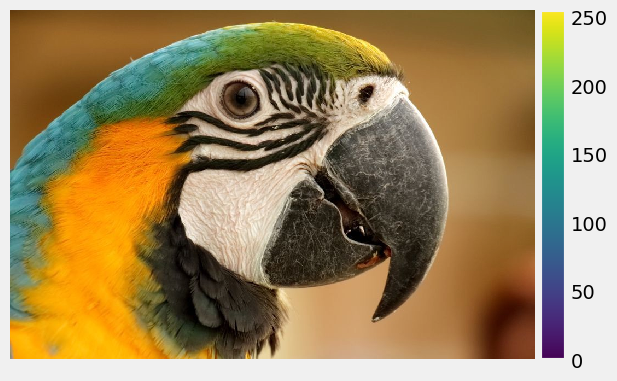

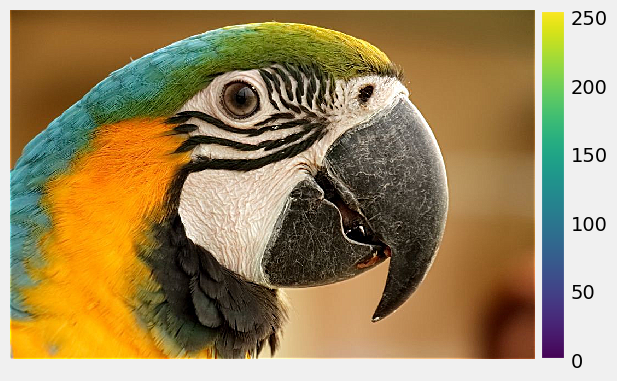

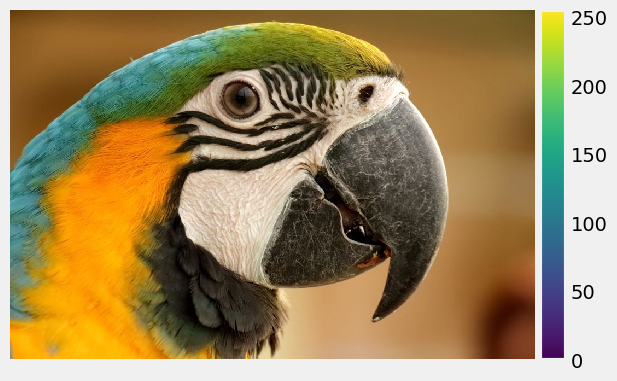

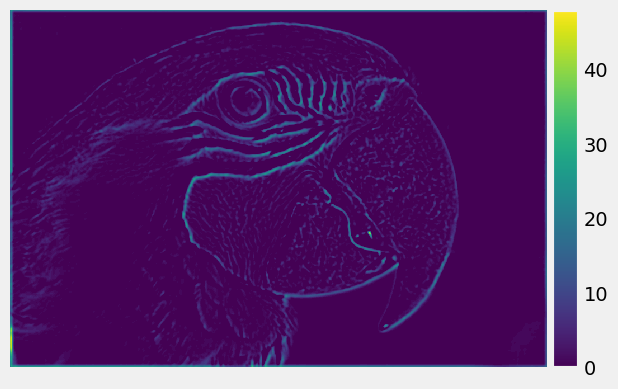

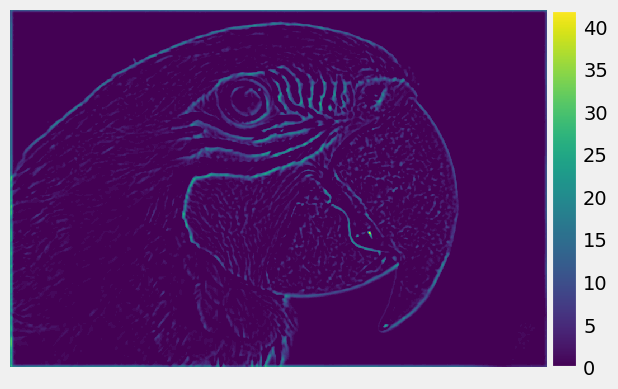

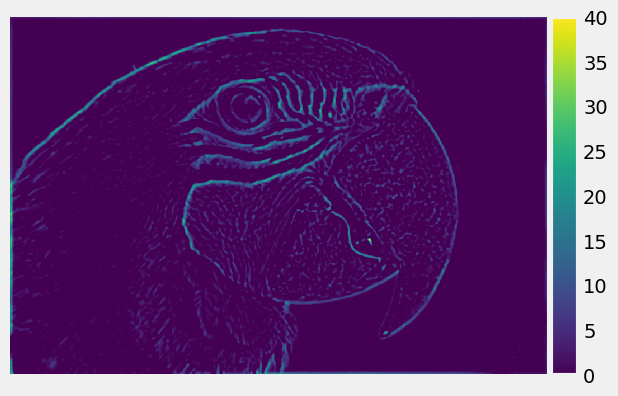

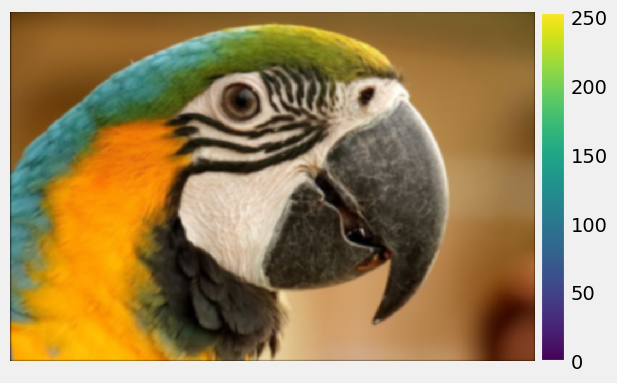

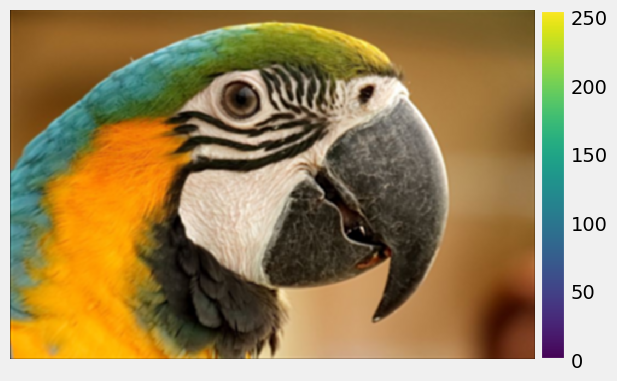

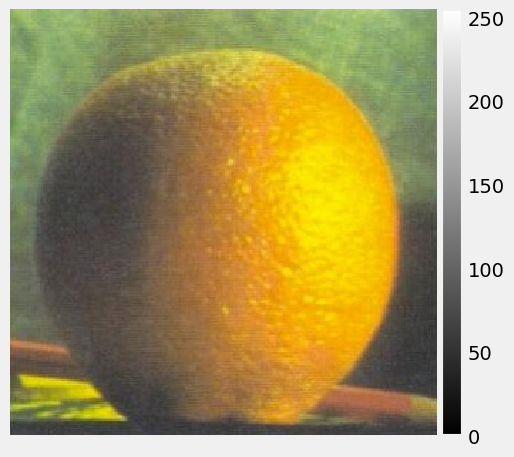

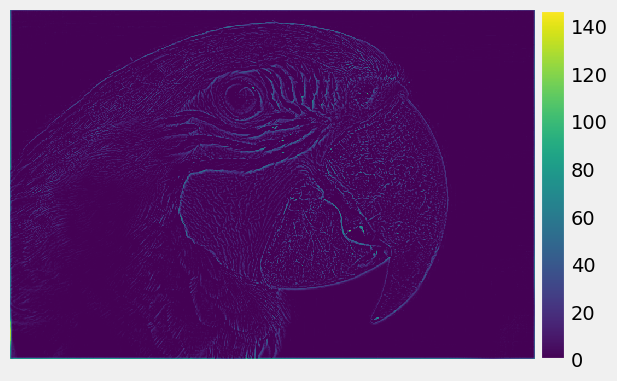

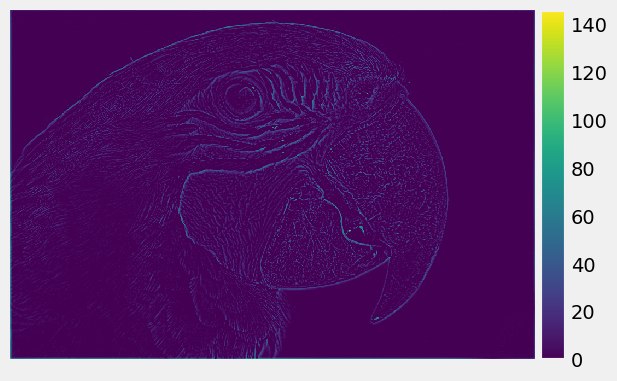

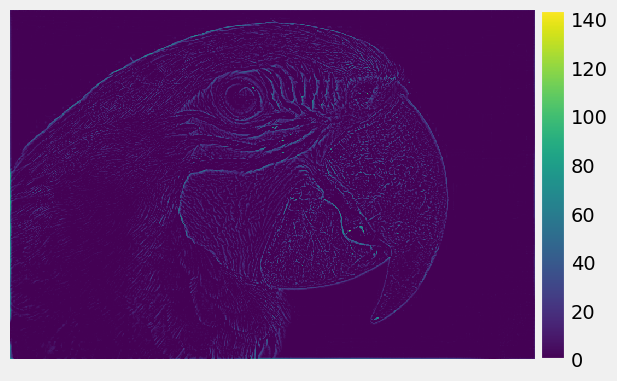

High frequency of the R, G, B channels of Parrot:
